We are rapidly approaching the “is there anything large language models can’t do?” stage. They crush the medical licensing exams. They’re more empathic than physicians on Reddit. So, can they objectively help you become a better doctor?

This is again one of those “we locked doctors in a room with a written case description” tests. Physicians (three-quarters attendings, the remainder residents) needed to write out diagnostic and management plans in response to a complex case. Half were set free on their own using “conventional” resources, whilst the remainder received the heavenly power of GPT-4.

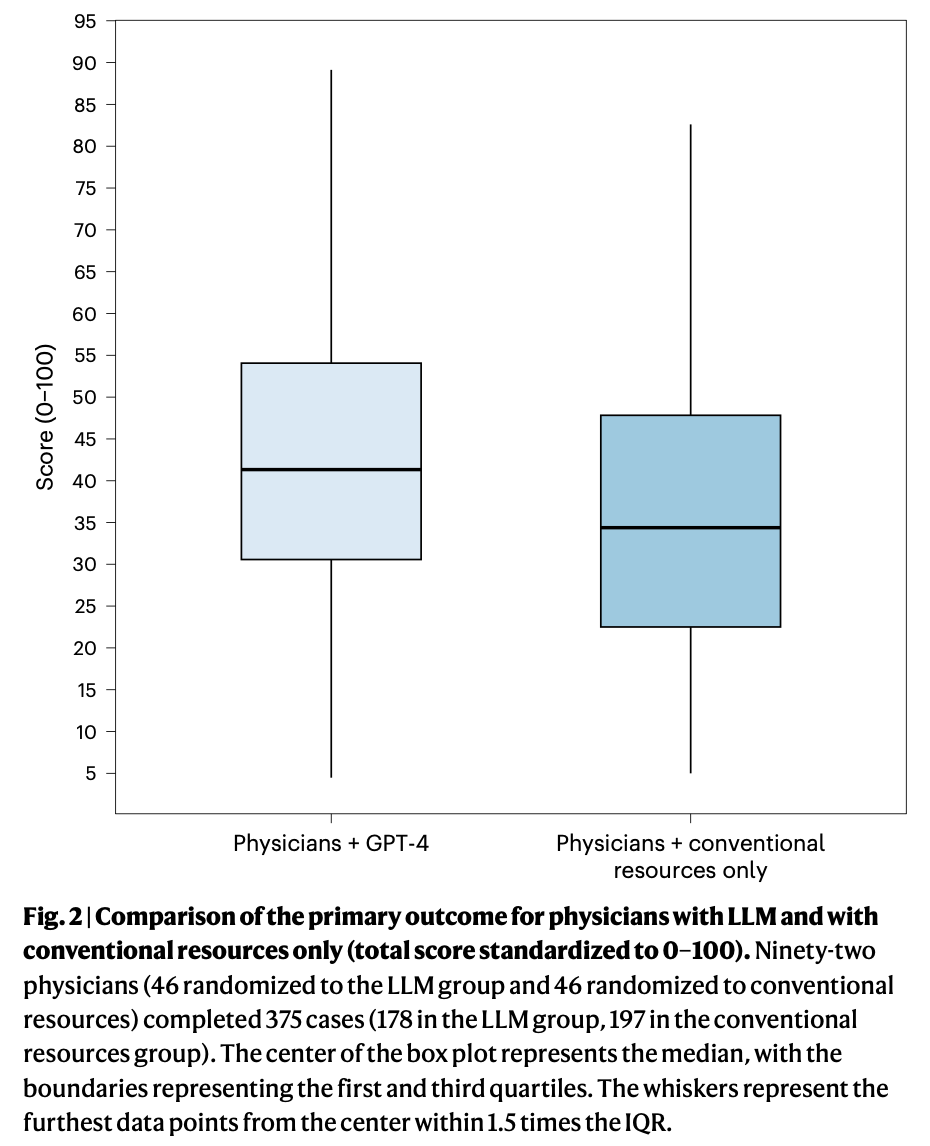

Physicians + GPT-4 win!

Obviously, the magnitude of the difference is trivial, and many clinicians scored better unaided than physicians + GPT-4. Also, seriously – how are the whiskers on these plots going all the way down to 5? It is more than a little concerning to see physicians utterly blowing these cases, and it suggests there may be validity issues in either case construction or measurement methods.

The only “loss” is that physicians aided by GPT-4 tended to spend more time per case but, correspondingly, they also provided slightly longer answers.

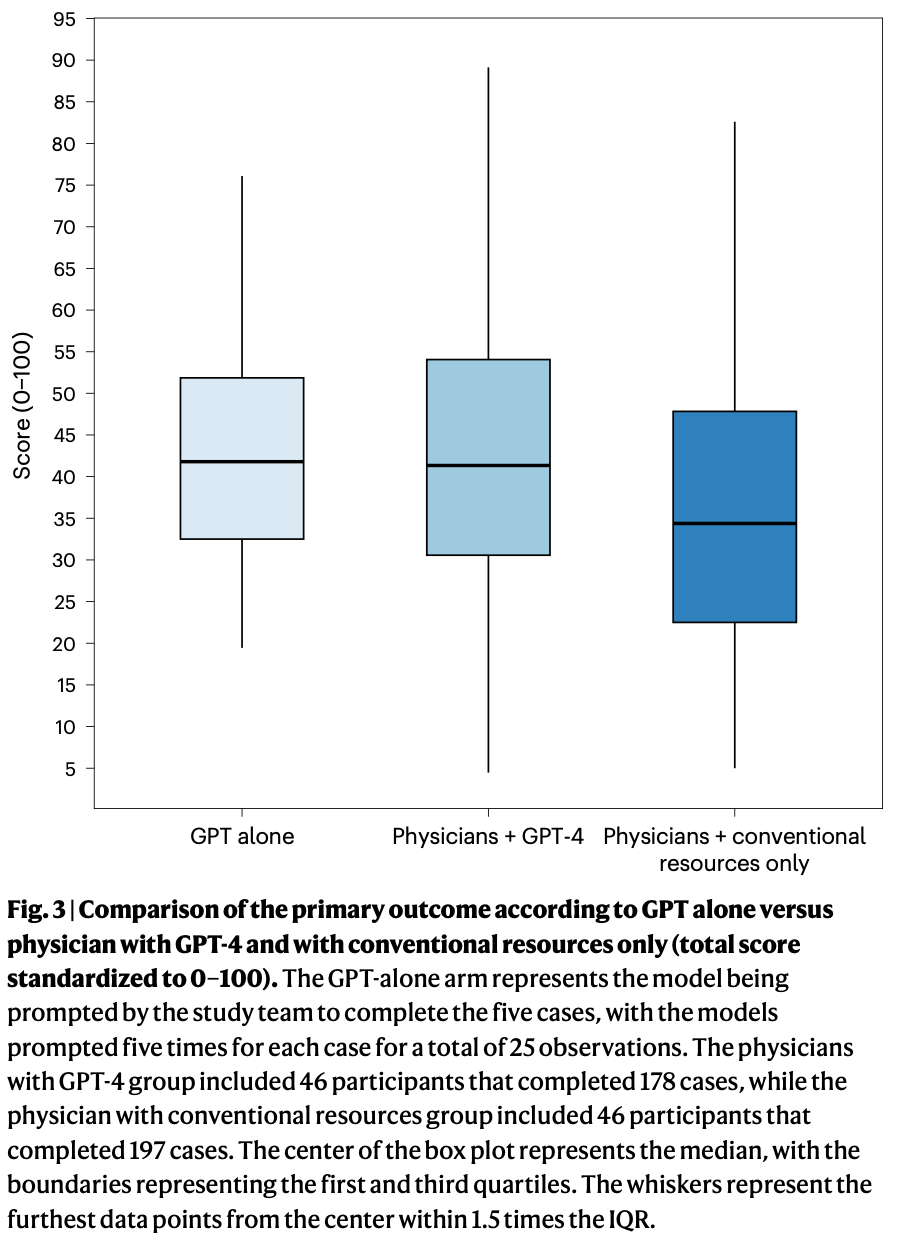

Oh, and then there’s this loss:

In a slightly smaller sample of cases fed through GPT-4 without clinician involvement (interference?), the LLM alone was easily as capable as the augmented clinicians.

Again, this is a contrived and carefully orchestrated test of clinical knowledge, and not real-world information gathering and synthesis – but clearly the foundation is there for AI to competently manage cases, in general, when fed the relevant information.